Generative AI for Education: Safeguarding Privacy and Promoting Accessibility in Schools

November 21, 2024

The rapid rise of generative AI (GenAI) is transforming industries, including education, by enabling personalized learning experiences, streamlining administrative tasks, and creating dynamic student engagement. Numerous tools provide tailored learning resources and interactive content, allowing students to explore material in innovative ways. However, this integration raises significant concerns around data privacy and accessibility, as AI models often rely on large datasets containing sensitive information. Safeguarding this data becomes critical as schools adopt these technologies.

To ensure responsible AI use in education, institutions must align their AI tools with ethical values, compliance standards, and accessibility requirements. Addressing issues such as academic integrity and equitable access is essential to prevent barriers for students from diverse backgrounds. Establishing robust frameworks, including policies on data privacy and strategies for inclusive use, will enable schools to balance innovation with the protection of student rights. This dual approach is vital for maximizing GenAI’s potential while mitigating its challenges.

Understanding Generative AI: Redefining Technology

Generative Artificial Intelligence (AI) is a specialized branch of AI designed to create new content by leveraging deep learning models trained on vast datasets. Unlike traditional AI, which focuses on identifying patterns or making predictions, generative AI produces outputs that resemble human creativity, such as images, text, music, and even videos. This innovation is powered by advanced algorithms and architectures like Large Language Models (LLMs), enabling machines to respond to natural language prompts with remarkable accuracy and sophistication.

Prominent examples of generative AI include ChatGPT, Google Bard, and DALL-E, which are widely adopted across industries for tasks ranging from writing and image generation to code development. These systems are trained on extensive data sources, such as books, articles, and user-generated content, allowing them to mimic human-like responses. However, they generate content based on patterns in their training data rather than true understanding, which can sometimes result in inaccurate or fabricated outputs, often referred to as "hallucinations." Despite these limitations, generative AI continues to evolve, offering innovative solutions while prompting discussions about ethical considerations, data privacy, and its broader societal impact.

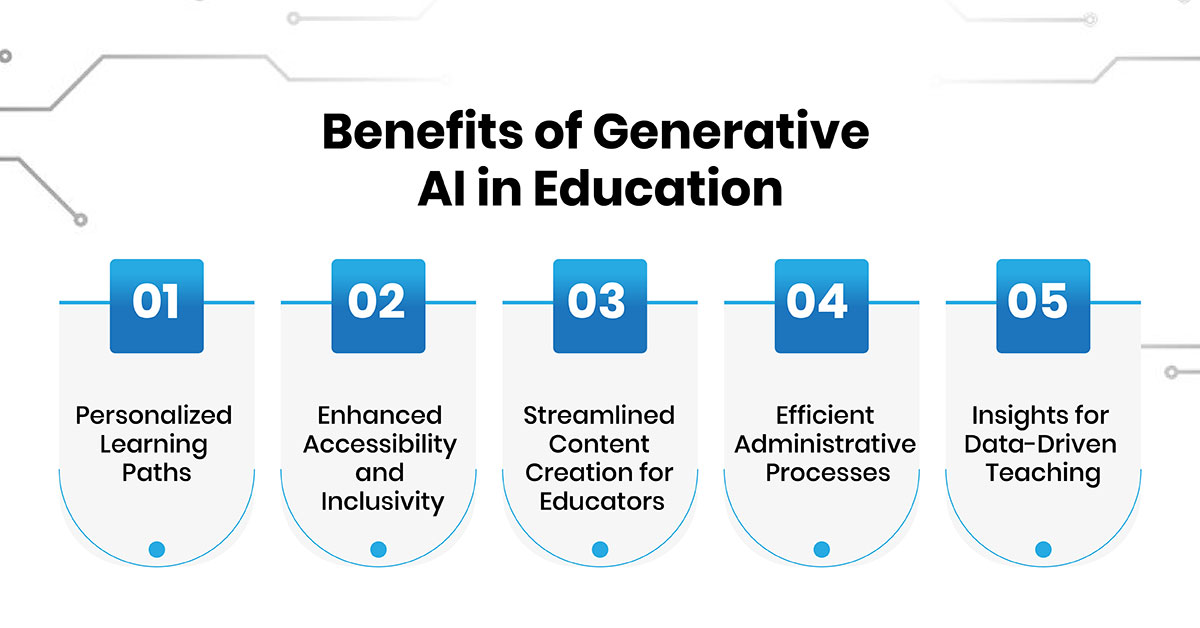

Benefits of Generative AI in Education

Generative Artificial Intelligence (AI) is revolutionizing education by offering tools and capabilities that enhance learning, teaching, and administrative processes. Below are the key benefits of generative AI in educational contexts:

1. Personalized Learning Paths

Generative AI tailors educational content to individual student needs by analyzing data such as learning styles, performance, and preferences. This customization lets students progress at a comfortable pace, enhancing their engagement and comprehension.

2. Enhanced Accessibility and Inclusivity

AI-driven tools break down barriers to education for students with disabilities and those requiring additional support. Real-time transcription, language translation, and adaptive content ensure that learners from diverse backgrounds have equitable access to resources.

3. Streamlined Content Creation for Educators

Generative AI assists teachers by automating the creation of lesson plans, quizzes, sample problems, and writing scenarios. It can also help draft course policies, syllabi, and learning objectives, saving educators time for more meaningful interactions with students.

4. Efficient Administrative Processes

Beyond teaching, generative AI simplifies administrative tasks like grading, managing schedules, and analyzing student performance data. These efficiencies allow educators and institutions to focus more on improving educational outcomes.

5. Insights for Data-Driven Teaching

By analyzing patterns in student performance, generative AI provides actionable insights for teachers to identify areas of difficulty and adapt instructional strategies. This responsive approach enhances the learning experience and addresses challenges proactively.

Privacy and Accessibility Challenges in Using Generative AI in Education

The integration of generative AI (GenAI) in education offers transformative opportunities, but it also raises significant challenges, particularly concerning data privacy and equitable accessibility. Addressing these issues is crucial to ensure ethical and effective use of AI tools in learning environments.

Privacy Concerns

1. Data Vulnerability and Leakage

Generative AI models require vast amounts of data for training, including sensitive and proprietary information from educational institutions. Improper handling or lack of robust security measures increases the risk of data leakage, potentially leading to misuse or exploitation of confidential information.

2. Susceptibility to Cyberattacks

GenAI systems are vulnerable to model inversion attacks, where malicious entities reverse-engineer AI models to extract sensitive training data. Such attacks pose a risk to the privacy of organizational data, including academic records, research details, and internal operations.

3. Transparency Issues

The complex computations behind generative AI make it challenging to trace how data is processed and used. This lack of transparency can lead to non-compliance with privacy regulations and undermine trust in AI systems.

4. Fabricated Content Risks

GenAI's ability to create deep fakes or misleading content, such as altered academic records or fabricated educational material, poses risks to institutional credibility. It also complicates verifying the authenticity of content within educational systems.

Accessibility Challenges

1. Digital Divide

While AI tools promise to enhance learning, not all students have equal access to the required technology and infrastructure. This digital gap can exacerbate existing inequalities, leaving disadvantaged communities without the benefits of AI-driven education.

2. Bias in Training Data

Generative AI models reflect the biases present in their training datasets, which can result in unfair outcomes for specific groups of students. For example, language or cultural biases might limit accessibility for non-native speakers or minority communities.

3. Support for Differently Abled Learners

Though AI has the potential to assist learners with disabilities, inconsistent integration of accessibility features can hinder its effectiveness. Institutions must prioritize inclusivity when implementing AI tools.

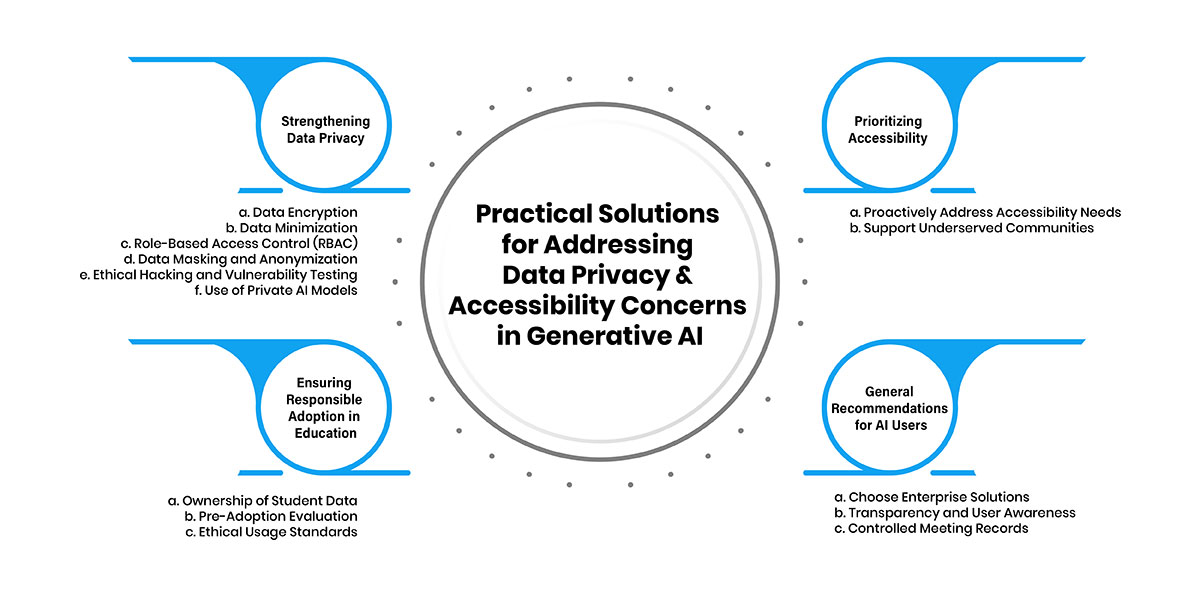

Practical Solutions for Addressing Data Privacy and Accessibility Concerns in Generative AI

Generative AI has revolutionized various domains, including education and business, but it also raises significant privacy and accessibility challenges. Effective strategies are essential to address these issues while advancing innovation.

1. Strengthening Data Privacy

To mitigate privacy risks, the following practices are essential:

a. Data Encryption

Robust encryption mechanisms ensure that sensitive data is secured both in transit and in storage. Without the decryption keys, intercepted or stolen data remains unreadable.

b. Data Minimization

Restricting data usage to only what is necessary for training AI models minimizes the risk of exposing sensitive information. This approach reduces the impact of potential breaches.

c. Role-Based Access Control (RBAC)

Access should be granted only to authorized individuals based on their roles. Two-factor authentication and clear permissions policies further safeguard sensitive data.

d. Data Masking and Anonymization

Replacing identifiable data with pseudonyms or generalized values during AI model training helps keep personal information confidential.

e. Ethical Hacking and Vulnerability Testing

Regular penetration testing and vulnerability assessments identify and fix security weaknesses, protecting organizational and user data from exploitation.

f. Use of Private AI Models

Private Large Language Models (LLMs) deployed within an organization's own infrastructure allow data to remain in a controlled environment, reducing exposure risks and enabling customized security protocols.
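As a rough illustration of how data minimization and data masking might look in practice, the Python sketch below strips a student record down to an allow-list of fields and replaces the student ID with a keyed hash before the record goes anywhere near a training pipeline. The field names, the `ALLOWED_FIELDS` set, and the helper functions are hypothetical and chosen only for this example; a real deployment would also need proper key management. Note that a keyed pseudonym is not full anonymization, since other fields may still identify a student.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields this illustrative training job needs.
ALLOWED_FIELDS = {"student_id", "grade_level", "quiz_score"}


def minimize(record: dict) -> dict:
    """Data minimization: drop every field that is not on the allow-list."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Data masking: replace the student ID with a keyed hash (HMAC-SHA256).

    The same ID and key always give the same pseudonym, so records can still
    be linked to each other without exposing the real identifier.
    """
    masked = dict(record)
    student_id = str(record["student_id"]).encode("utf-8")
    digest = hmac.new(secret_key, student_id, hashlib.sha256).hexdigest()
    masked["student_id"] = digest[:16]  # shortened for readability
    return masked


def prepare_for_training(records: list[dict], secret_key: bytes) -> list[dict]:
    """Apply minimization first, then pseudonymization, to every record."""
    return [pseudonymize(minimize(record), secret_key) for record in records]


if __name__ == "__main__":
    # Made-up sample record, not real student data.
    raw_records = [
        {
            "student_id": "S-1001",
            "name": "Example Student",
            "home_address": "123 Example Street",
            "grade_level": 7,
            "quiz_score": 88,
        }
    ]
    # In practice the key would come from a secrets manager, not source code.
    print(prepare_for_training(raw_records, secret_key=b"example-key-only"))
```

Running minimization before masking means fields such as names and addresses are discarded outright rather than transformed, which leaves less to protect if the prepared dataset is ever exposed.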

2. Ensuring Responsible Adoption in Education

The adoption of GenAI in schools requires additional safeguards:

a. Ownership of Student Data

Educational institutions must avoid using generic AI tools that may process or use student data for commercial purposes. Schools should prioritize AI tools developed explicitly for educational purposes, ensuring compliance with legal standards.

b. Pre-Adoption Evaluation

Before implementing AI tools, institutions should verify their security measures, privacy policies, and terms of use. Piloting tools with staff rather than students minimizes premature exposure of sensitive student data.

c. Ethical Usage Standards

Various organizations advocate for frameworks that prioritize safety, accountability, fairness, equity, and efficacy (SAFE). Educational institutions should rely on such guidance to choose tools that align with ethical principles.

3. Prioritizing Accessibility

To bridge the digital divide, organizations and institutions should:

a. Proactively Address Accessibility Needs

From the start, ensure AI tools meet accessibility guidelines such as ADA standards. Involve diverse stakeholders in testing to make necessary adjustments for inclusivity.

b. Support Underserved Communities

Provide essential infrastructure and resources, such as affordable devices and internet access, to underserved students to ensure equitable participation in AI-driven learning.

4. General Recommendations for AI Users

For individuals and organizations using GenAI, these practices enhance privacy and security:

a. Choose Enterprise Solutions

Enterprise solutions often come with contractual protections, enhanced security features, and real-time support.

b. Transparency and User Awareness

AI developers should clearly disclose how data is collected and used, provide opt-out mechanisms, and integrate privacy-enhancing technologies from the design phase.

c. Controlled Meeting Records

For meetings involving AI transcription, limit its use to non-sensitive discussions and notify participants about AI involvement. Restrict access to meeting records and ensure compliance with retention policies.
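The controls on meeting records described above could be sketched along the following lines in Python. Everything here is an assumption made for illustration: the role names, the permission strings, the 90-day retention period, and the `MeetingRecord` structure are hypothetical and do not come from any specific product or policy.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical role-to-permission mapping for AI-generated meeting transcripts.
ROLE_PERMISSIONS = {
    "administrator": {"read_transcript", "delete_transcript"},
    "teacher": {"read_transcript"},
    "guest": set(),
}

# Assumed retention period; a real value would come from institutional policy.
RETENTION_DAYS = 90


@dataclass
class MeetingRecord:
    title: str
    recorded_on: date
    sensitive: bool  # True if the discussion covers sensitive matters


def may_transcribe(record: MeetingRecord) -> bool:
    """Limit AI transcription to non-sensitive discussions."""
    return not record.sensitive


def can_access(role: str, action: str) -> bool:
    """Allow an action only if the role explicitly grants it; unknown roles get nothing."""
    return action in ROLE_PERMISSIONS.get(role, set())


def is_expired(record: MeetingRecord, today: date) -> bool:
    """Flag records older than the retention period so they can be deleted."""
    return today - record.recorded_on > timedelta(days=RETENTION_DAYS)


if __name__ == "__main__":
    record = MeetingRecord("Curriculum planning", date(2024, 1, 1), sensitive=False)
    print(may_transcribe(record))
    print(can_access("teacher", "delete_transcript"))
    print(is_expired(record, today=date(2024, 6, 1)))
```

Denying by default, so that an unknown role or unlisted action is refused, keeps mistakes in the permission table from silently widening access.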

Conclusion

The integration of generative AI in education presents a dynamic opportunity to enhance learning, teaching, and administrative processes. However, its successful implementation requires careful attention to privacy concerns and accessibility challenges. By adopting robust privacy safeguards and prioritizing inclusivity, schools can unlock the full potential of AI while protecting students' rights and ensuring equitable access to educational resources. As the technology continues to evolve, ongoing collaboration among educators, developers, and policymakers will be essential to address emerging concerns and maximize the benefits for all students.